Apple’s WWDC 2025 keynote is just days away, and expectations are high. This year’s announcements are rumoured to include a dramatic visual overhaul—complete with rounded icons on the Home screen and a more unified interface across macOS and iOS. Apple may also introduce a new naming scheme for its operating systems, and its AI effort, Apple Intelligence, is expected to see improvements after a lukewarm debut last year.

But beyond new names and fresh polish, disabled people are asking the same question they do every June:

Will this be the year Apple finally fixes the accessibility gaps we live with every day?

Despite its deserved reputation as a leader in accessibility and inclusive design, Apple continues to leave significant issues unresolved. As I’ve written in The Register over the past two years, first about Voice Control and then about the Apple Watch, these aren’t edge-case frustrations. They’re fundamental shortcomings in how Apple’s ecosystem serves disabled people.

WWDC 2025 is a chance to put that right.

macOS: Fix Voice Control or fall further behind

When Apple launched Voice Control in 2019, it marked a huge leap forward for disabled people who can’t use a keyboard. For the first time, we could control the Mac entirely with our voice. But six years on, the cracks are painfully clear, and I laid them out in The Register in 2023.

Voice Control still strips all formatting when pasting text. That means if I use a custom command to insert my professional email signature, it arrives as plain Helvetica, no links, no bold, no structure—like I’m emailing from a PalmPilot in 2002.

There’s no way to insert rich text, no clipboard memory, no persistent app context. And unlike Nuance Dragon, Voice Control doesn’t adapt or learn from corrections—so the same recognition errors keep happening day after day. Over time, this not only undermines accuracy but chips away at productivity, forcing disabled people to repeat commands, rephrase sentences, or manually fix mistakes that should have been learned and avoided.

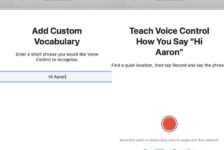

Voice Control doesn’t even let you quickly re-record misheard custom vocabulary without diving into clunky menus.

Yes, Apple added custom vocabulary recording in macOS 15—something I long campaigned for. It’s a welcome step. But it’s still not enough.

At WWDC 2025, Apple must rebuild Voice Control as a serious productivity and independence tool:

• Support formatted text commands

• Introduce clipboard controls

• Give Voice Control a memory so dictation accuracy improves over time

• Let us re-record entries more easily

• And make Voice Control work reliably across apps, not just in Apple’s own.

iOS: If AI is coming, it must serve everyone

iOS 26 is expected to include more on-device intelligence, which might mean more conversational interactions. That’s exciting.

But smarter software only helps if disabled people can actually use it. Even Apple’s own Shortcuts app, which could empower disabled people to automate tasks, remains confusing and inaccessible to many. For some, it requires too much technical skill to use without assistance.

When Apple finally delivers Siri as an AI-powered assistant, it must not forget accessibility. This means:

• Building natural voice chaining into Siri, and expanding support for people with atypical speech

• Enabling app-agnostic control, so Siri can operate any app, not just Apple’s own

• Creating a beginner-friendly Shortcuts builder with assistive support

• And ensuring any AI upgrade works hands-free, always.

Apple Watch: The wearable that still demands touch

In August 2024, I wrote a widely shared piece for The Register on Apple Watch accessibility, and the response from disabled readers was overwhelming: I’m not alone in my frustration.

Despite being worn on the wrist, the Apple Watch remains stubbornly reliant on physical interaction. I still can’t:

• Wake Siri without wrist acrobatics I can’t perform

• Dismiss a notification hands-free

• Check health metrics and summon help hands-free in an emergency

Even basic Siri tasks often require the wrist to be raised or the screen to be touched, which renders the watch useless in bed, on a ventilator, or during moments of fatigue or paralysis.

Compare that to Meta’s smart glasses, which let me send messages or play music by voice alone. They’re not perfect, but they’re moving in the right direction.

Apple’s watch is a stunning device—but it needs to serve disabled people too. At WWDC 2025, we need:

• Full Siri functionality without screen taps or wrist raises

• Voice-based app control

• And the ability to dismiss or action alerts entirely by voice

Conclusion: Accessibility must lead—not lag behind

Apple often does accessibility better than most, but that’s no excuse to stop pushing. Many of us rely on its ecosystem not just by choice, but because there are few alternatives. When features like Voice Control or Siri fail, our independence suffers.

WWDC 2025 is a chance to right those wrongs—not just with new features, but by fixing the ones we already have.

If AI is the future of Apple, it must be an accessible one.

If the Apple Watch is wearable tech, it must be usable hands-free.

If Voice Control exists, it must evolve.

I’ll be watching—literally and figuratively. I hope Apple is listening.