It’s a year since Apple first unveiled its flagship accessibility feature, Voice Control, at WWDC 2019. In an inspiring short film, the company showed Ian Mackay, a disability advocate and outdoor enthusiast, using voice commands to control his Mac computer.

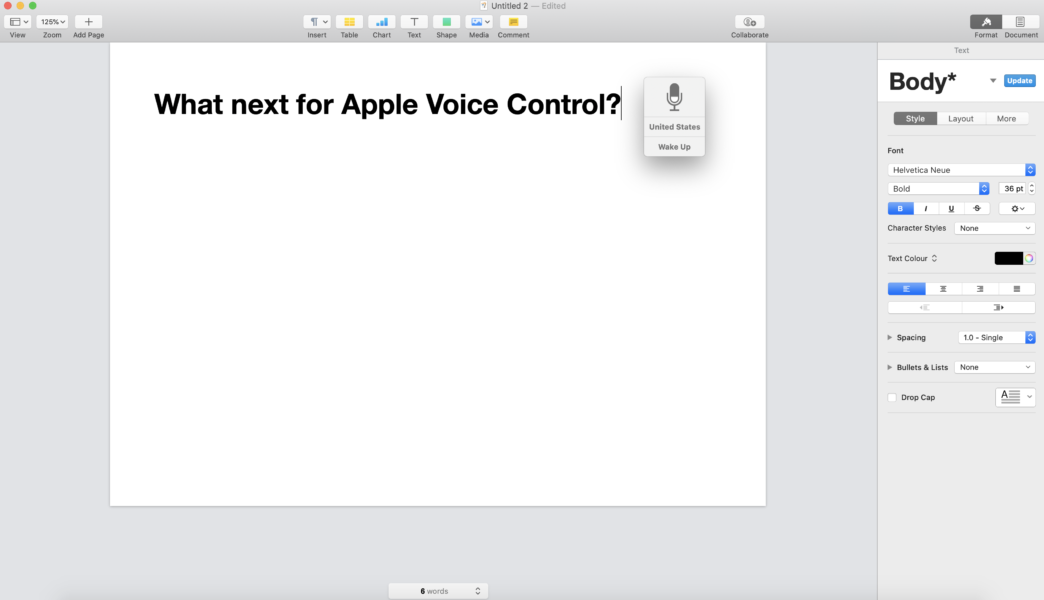

Voice Control is a speech-to-text application that is now baked into Apple devices. It offers physically disabled people, and anyone else who owns a Mac computer, iPhone or iPad, the ability to precisely control, dictate and navigate by voice commands alone.

I’ve been trying to use the application over the past year and have been left feeling frustrated and disappointed. With the next version of macOS set to be unveiled later this month at WWDC 2020, here’s why, and what Apple needs to do next to improve Voice Control.

Please note there are differences in the way Voice Control performs on macOS, iOS and iPadOS, but this article focuses on how it performs on Mac computers.

1) Works in some places but not others

Apple has claimed in some of its publicity for Voice Control that you can easily dictate into any text box. Unfortunately, this is not the case.

Dictation does not work in every text box when accessing websites via Safari. For example, WhatsApp Web works great, but dictating into WordPress, Google Search and Google Translate doesn’t provide a good dictation experience: accuracy is poor and no spaces are inserted between dictated words. There will be many other text boxes on other websites that also don’t play well with Apple’s speech recognition engine.

In the next version of macOS, Apple will need to ensure that web services designed for Safari meet accessibility standards if Voice Control dictation is to be a success. I’ve been speaking to experts over the past year, and my understanding is that the issues stem from the fact that Safari has its own rendering engine and Apple ultimately controls what can and can’t be done in its browser. For the most part, then, it is Apple’s responsibility to make sure its browser is more accessible to developers. However, there are occasions where websites use non-standard methods to create text fields, which prevent voice dictation from working. Essentially, it’s nobody’s and everybody’s fault.

I have raised this issue with Apple and it’s my hope that the company’s Voice Control and Safari development teams have achieved better cohesion now, and have been working with the wider community to improve Voice Control dictation capabilities on the web.

2) USA only

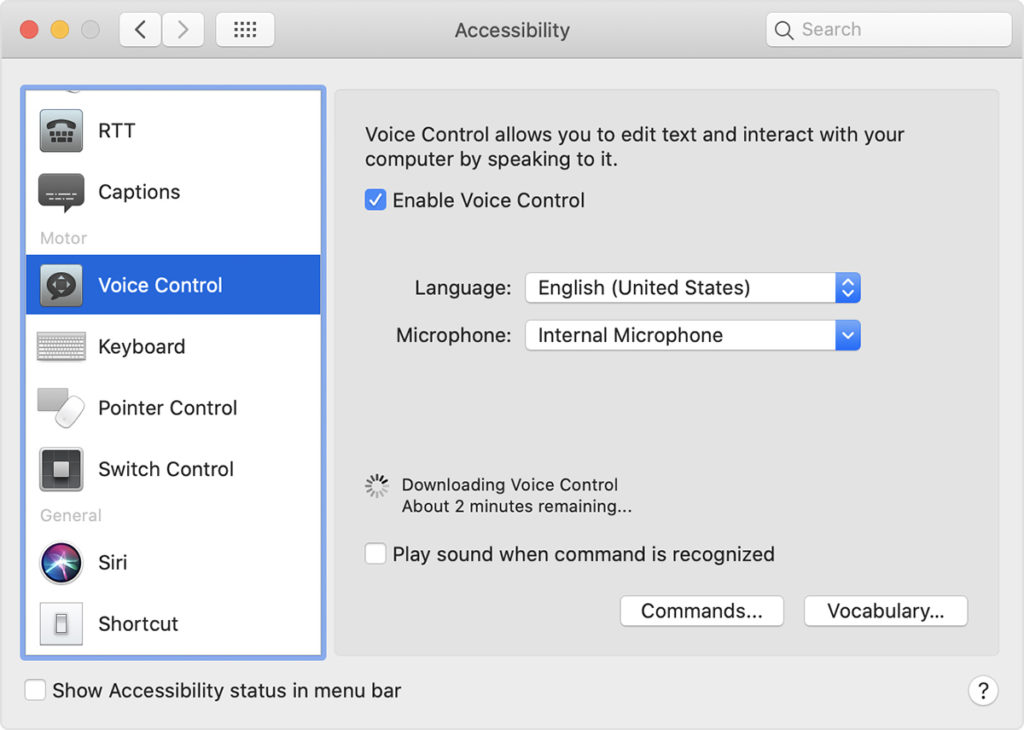

Voice Control uses the Siri speech-recognition engine to improve on the Enhanced Dictation feature available in earlier versions of macOS. The problem for me, as a UK English speaker, is that it currently offers the higher-quality Siri engine for dictation in U.S. English only. Other languages and dialects use the speech-recognition engine previously available with Enhanced Dictation, which is inferior.

It has been more than frustrating to see numerous updates to macOS over the past 12 months without any other languages being added. Only when the Siri speech-recognition engine supports UK English will the accuracy of the words I dictate improve, and only then will Voice Control perhaps finally become a productive tool to use.

3) Word training

Voice Control has a vocabulary that can be customised, allowing users to teach the application new words. However, the problem is that if you use work jargon, or you have friends with foreign names, Voice Control will never understand those correctly. For example, I have many Polish friends, and even if I add their names as custom words in the vocabulary, Voice Control still fails to recognise and dictate them correctly.

Nuance’s Dragon Professional Individual for Windows 10 deals with this problem by allowing the user to voice train any word when it is added to custom vocabulary, which ensures that the same recognition error doesn’t happen time after time. Apple needs to add this ability to train words.

There is also a problem when you ask the application to correct a word. It comes up with a numbered list of options, but I rarely find the word I’m looking for among them. This suggests to me that the application’s powers of recognition are limited.

Accuracy is everything for people with severe disabilities. If there is an error in recognition when dictating, people like me can’t take to the keyboard and simply carry on. Poor recognition and constant errors lead to fatigue and frustration.

4) Microphone setup and optimisation

For the best performance when using Voice Control with a Mac, Apple advises users to use an external microphone. Do not rely on the internal microphones on MacBooks, because the recognition accuracy will be woeful. I use a high-quality USB table microphone instead. Apple needs to focus more on microphones and the important role they play in improving the accuracy of dictation, especially in noisy environments and those with poor acoustics. The company could optimise its AirPods for dictation on the Mac, but it would need to increase their battery life for this to work effectively. In comparison, Nuance spends a lot of time advising on microphones, provides microphone calibration settings in its software, and even rates different microphones for performance. Apple says very little about the role microphones play in accurate dictation. It needs to say and do more in this area going forward.

macOS 10.16 and Voice Control

It would be great to see Apple iron out all these issues and shortcomings in Voice Control in the next version of macOS, because the company has a tortuous history when it comes to speech-to-text technology. Nuance offered a Mac version of Dragon, its leading Windows product, up until October 2018, when it abruptly withdrew from the Mac platform. One senior Nuance executive said at the time that this was because of frustrations with how Apple’s API restrictions left the company unable to implement some of the features it was able to offer in its Windows version.

When Voice Control was launched a few months later, many were excited at the prospect of having native voice recognition capabilities baked into Apple devices. However, it’s a sad indictment of Voice Control that, as a severely physically disabled person, I don’t use it at all because of the poor level of recognition accuracy. Instead, Apple has left me with no option but to run a virtual machine on my MacBook Pro, which allows me to run Windows 10 and Nuance’s Dragon Professional for dictating my emails, documents, social media posts and WhatsApp messages. Whilst Dragon does everything Voice Control does, it also does a lot more, leading to higher levels of dictation accuracy and productivity. As a long-time loyal Apple consumer, it’s a constant source of disappointment that I have to turn to Windows to get things done.

It should be noted that Apple bundles Voice Control for free with its various software platforms, whereas Nuance charges £350 for Dragon Professional Individual software to run on Windows computers. I would happily pay Apple for a professional voice recognition product that offered the same capabilities and accuracy as Nuance’s product. Baking in what some may call mediocrity for free does not do anyone any favours in terms of extending accessibility. It just leads to frustration and disappointment, and holds back education, careers, and online social engagement.

It looks like this year Apple will be going big on mental health, but let’s hope it still has new things to offer people living with severe physical disabilities. I’ll be reviewing what changes, if any, Apple makes to Voice Control when the next version of macOS is unveiled at WWDC later this month but, for now, I want to hear your suggestions. When it comes to Voice Control, what improvements do you want to see in 2020?

Serina

Thank you. brilliant article as always. Apple seem be set on continuing to advertise there in efficient, frustrating, and fundamentally flawed”Voice control”. Which as you say, scarcely helps severely disabled people, The American English being the exclusive language is a big one, curiously, they speak of it being the native language on there website, not sure why but miss leading information makes it even more difficult for disabled people.

I should print out the —OK going for a four letter word hair: VAKIST fast rasta Mantz VAKIST fast VAAAST amount of grammatical error’s are Apple, but imagine you know that using it to yourself. Wow it kind of blows my mind how impossible it is to write the most simple comment, or even sentence. Thank you for writing this. I think what a pool a poor apple Apple Apple Apple doing is actually offensive. OK Apple leave it in then, hi did actually tried to edit that out but it is taking forever. And it’s indecipherable!