Ahead of its annual Worldwide Developers Conference (WWDC) on the 6th of June, Apple has previewed updated accessibility features coming to its platforms later this year.

Among the software updates with the potential to change lives are LiDAR-powered Door Detection for blind or visually impaired users, Live Captions for deaf people or those with hearing loss, and features which give those with physical and motor disabilities the ability to control the Apple Watch via an iPhone.

It is the second year in a row Apple has previewed accessibility updates ahead of its annual cycle of more general software updates, which this year will be unveiled at WWDC next week.

This is what Apple has announced that is of particular interest to disabled people with physical and motor impairments, as well as some questions the company has yet to answer.

Voice Control upgrade

Voice Control, Apple’s speech recognition app for disabled people with physical impairments, is frustrating to use at the moment. On the one hand, it is great for opening and closing applications, and more generally navigating the Mac desktop with just your voice. But when it comes to accurate speech recognition it disappoints.

The application is riddled with bugs and shortcomings: it handles grammar and proper nouns poorly, works in some text boxes but not others, and is just not very smart, with the same dictation errors happening repeatedly. In its current form there is very little evidence that any meaningful machine learning is being used to enhance the user experience.

However, a sign that Apple may be about to show Voice Control some long overdue love and attention this year is Voice Control Spelling Mode, which is a new feature that will give users the option to dictate custom spellings using letter-by-letter input.

It is something that is standard in voice dictation applications like Dragon Professional on Windows computers, and has been a glaring omission in Voice Control since its launch three years ago.

As someone with a physical disability who has problems touching the keyboard to clear up dictation errors, I expect Spelling Mode to be a boon for dictation accuracy and productivity, and great for typing out email IDs and usernames in text boxes.

In theory, Spelling Mode should mean that when you dictate the names of foreign friends, whose names are not in Voice Control’s vocabulary, the application will recognise the names, and the same basic errors will not keep repeating. It should be the same for any other proper nouns that you use as part of your day-to-day vocabulary.

However, there is a slight concern that Voice Control Spelling Mode may need to be used every time you use a custom spelling, such as with proper nouns it doesn’t recognise. Apple hasn’t said.

If Spelling Mode stores and remembers your preferred custom spelling, it is going to be a massive gain for productivity.

As a Brit, I am disappointed to note in the small print that Spelling Mode will only be available for US English at launch later this year. Hopefully, Apple will not make users of UK English, and other languages, wait too long to make use of this exciting new feature.

Watch accessibility improvements

Last year’s May accessibility preview brought AssistiveTouch for Apple Watch, a feature that provides a way to perform gestures to control the Apple Watch for those with physical and motor disabilities.

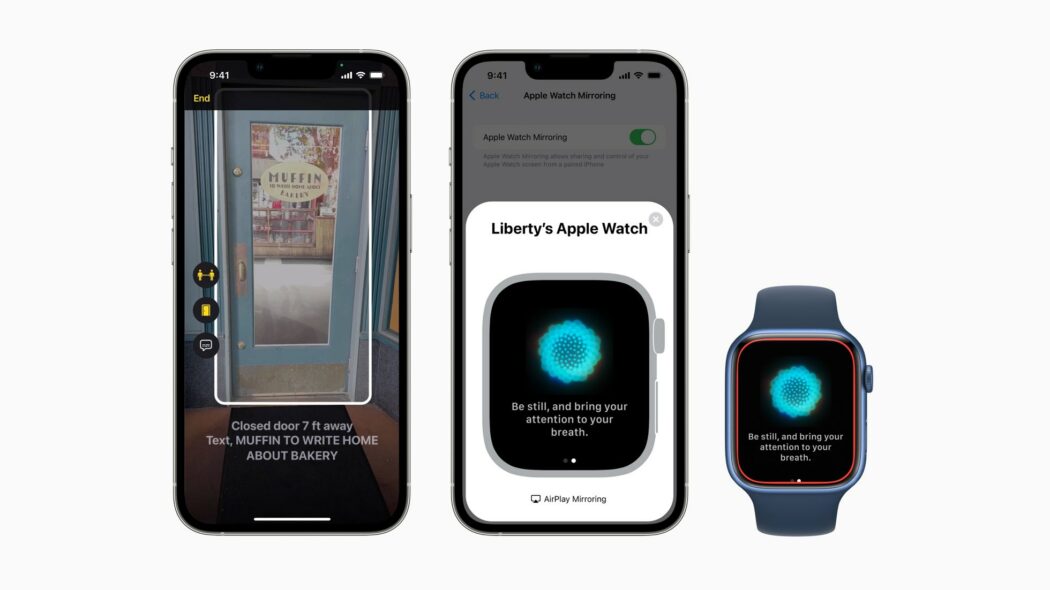

This year, Apple is bringing more Watch gestures, which the company is calling Quick Actions, and a new feature called Apple Watch Mirroring.

With Quick Actions on Apple Watch, the company says a double-pinch gesture can answer or end a phone call, dismiss a notification, take a photo, play or pause media in the Now Playing app, and start, pause, or resume a workout.

I am disappointed I cannot use any of the AssistiveTouch gestures launched last year, such as pinch and clench. Apple did not make them sensitive enough for the level of muscle power in my hands and fingers. At this stage I am not overly optimistic the new Quick Actions will be any better. No pun intended, but fingers crossed they are (and perhaps that’s a new gesture Apple will consider!).

Apple Watch Mirroring enables users to control the Apple Watch remotely from a paired iPhone. With Apple Watch Mirroring, the company says users can control Apple Watch features like blood oxygen and heart rate using iPhone’s assistive features like Voice Control and Switch Control, and use inputs including voice commands, sound actions, head tracking, or external Made for iPhone switches as alternatives to tapping the Apple Watch display.

It will be interesting to see how helpful Mirroring will be, but I would like Apple to boost its voice assistant Siri’s capabilities as a way of extending access for disabled people with upper limb impairments. For example, the company should offer a Siri voice command for taking on-demand blood oxygen measurements with the Apple Watch, such as “Hey Siri, take a blood oxygen measurement”. At the moment Siri can open the blood oxygen app on the Watch but can’t actually trigger the “start taking a measurement” process.

I am still not sure how helpful, and most of all spontaneous, the new Mirroring feature will be. For example, if I am out with just my Watch, and no iPhone, can I run a blood oxygen measurement with just my voice, handsfree? I don’t think so, but as someone who uses a ventilator for part of the day, there may be reasons why I might want to check my blood oxygen handsfree, without my iPhone, while away from home.

There are interesting parallels between the way blind people need to use the Apple Watch and the way severely physically disabled people with limited or no upper limb movement need to use it.

I need assistive tech so I can control and use the iPhone and Watch as if I am “blind”. I can’t raise my wrist and hands to bring the iPhone screen, or Watch face, into view. Make the Watch and iPhone more accessible to blind people and you make them more accessible to people with my kind of physical motor disability, and vice versa. I don’t think Apple has fully grasped to date that there are a lot of people wanting to use the Watch and iPhone completely “blind” with just their voice.

Siri Pause Time adjustment

With Siri Pause Time, Apple will give users with speech disabilities the ability to adjust how long Siri waits before responding to a request. Having a little extra time to complete your thought before Siri tries to act on it could make it a more useful tool for disabled people, and perhaps for the elderly too.

When will we be able to try the new features?

Apple says all these new accessibility features are coming “sometime later this year” via software updates. However, Aaron Zollo, a prominent iOS YouTuber, has said some of them could come sooner, in later betas of iOS 15.6 or macOS 12.5. Failing that, we will surely get to see them in iOS 16, due to be unveiled on 6th June.

Disabled people will be hoping that these new tools for navigation, health, communication, and more on Apple devices will, in various ways, transform lives by making devices like the iPhone, iPad, Apple Watch and Mac more accessible than ever before.