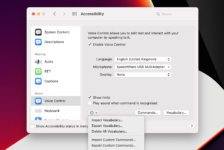

Full, fantastic, life-changing…this was one of the gushing headlines about Apple’s new Voice Control feature after it was announced at the tech giant’s annual Worldwide Developers Conference (WWDC) on 7 June. Voice Control offers physically disabled people, and anyone else who owns a Mac computer, iPhone or iPad, the ability to precisely control, navigate and dictate to their devices by voice commands alone.

The company’s new accessibility feature was unveiled with an inspiring short film. In it, a man in a wheelchair — Ian Mackay, a disability advocate and outdoor enthusiast — issued voice commands to a Mac computer. With little delay, the computer did as it was told.

So far so good for anyone who wants to control their devices with only their voice. However, with little over a month until the final release of iOS 13 and macOS Catalina, it doesn’t look as though everything is going smoothly with the development of Voice Control.

Apple has stopped users leaving feedback on Voice Control and dictation in its public beta program’s Feedback Assistant. Users were able to leave feedback earlier in the beta process but not now.

The company briefly removed the ability to add words to the vocabulary in Voice Control dictation in the macOS Catalina public beta. The option was greyed out in earlier betas. Without this ability Voice Control will be an inferior product and practically unusable. In early beta versions it has not worked as well as expected. Custom vocabulary is also not synced across devices.

Apple’s dictation does not work in every text box in Safari. For example, WhatsApp works great but WordPress and Google Translate don’t provide good and accurate dictation. There will be many other websites, too many to mention, that won’t play well with Apple dictation. Apple will need to ensure web services designed for Safari meet accessibility standards if Voice Control dictation is to be a success.

Ijjaz Ahmed, a respected developer, explained: “Chrome, Firefox and Safari have their own rendering engine and Apple ultimately control what can and can’t be done in their browsers so, for the most part, it is an Apple responsibility to make their browser more accessible to developers. On the other side, there are occasions where websites use non-standard methods to create text fields which prevent voice dictation from working. Essentially, it’s nobody’s and everybody’s fault.”

“Apple’s Voice Control and Safari development teams need to have better cohesion and work with the wider community to improve voice control on the web”, Ahmed added.

It is going to be a huge ask of Apple to get Voice Control to be as impressive, slick, productive and accurate across its platforms as the memorable film and presentation at its Worldwide Developers Conference in June.

Over the summer I have been trying iOS 13 public beta on my iPhone, and macOS Catalina public beta on my MacBook Pro.

When it comes to accessibility by voice, the early signs are that Voice Control is a big step forward on both iPhone and MacBook. For example, the “show numbers” feature is useful for quickly and easily navigating devices. Commands such as “open Messages”, “open Photos”, “scroll up”, “scroll down” and “select 2” provide liberating and impressive navigation of the screen.

However, some significant concerns remain that Voice Control will not live up to the hype. While the software is still in a beta testing phase, and this should rightly be acknowledged, it is only about a month until the final release and there are still significant barriers to access by voice, generally, on Apple devices.

You still can’t hang up a phone call either by a Siri speech command, or by creating a custom command in Voice Control. This is very disenfranchising and inconvenient for people who find it tricky to touch the phone screen. It is particularly disappointing Apple hasn’t addressed this in its development of Voice Control considering people have been feeding back to the company on this issue for some time. It is possible to end calls with a voice command on some Android phones.

You still can’t turn auto-answer for phone calls on and off by a voice command, either by Siri or a custom Voice Control command. Auto-answer is a core accessibility and phone function that answers calls automatically, which is useful if you can’t touch the screen. Yet while you can turn other phone functions on and off by voice (for example Bluetooth, the torch and Voice Control itself), this most important of accessibility features can’t be toggled by voice commands. How ironic is that?

Announce Messages with Siri is a new feature coming to iOS 13 this autumn. Apple does not bill it as an accessibility feature but, by enabling anyone with AirPods or Powerbeats Pro earbuds to have their messages read out to them by Siri automatically and to dictate a reply, it clearly has a lot of use for people who have difficulty interacting with their iPhone screen. The feature appeared in early beta versions this summer but for some reason Apple has removed it in the latest version, iOS 13 public beta 5. Let’s hope it returns soon.

As we near Apple’s September event and the announcement of the new iPhone, the beta process is starting to slow down. Apple is now generally focusing on performance improvements and bug fixes rather than adding new features. However, the company will occasionally cave in to customer demand for new features during the beta testing process of its software. For example, last week it introduced the ability to toggle the new dark mode feature on and off by Siri in iOS 13 beta 5. Why it can’t do the same for auto-answer remains a mystery.

Formula One car or skateboard

It is with accuracy of dictation that Voice Control really falls down the most at the moment. Dictation, transcribing spoken words into text on the page, is an important part of Voice Control. It is certainly the feature people use most for corresponding and keeping in touch. After using dictation in Apple’s Voice Control in macOS Catalina public beta, I have had to revert to the leading but costly Dragon Professional speech recognition software running on Windows 10 in Parallels on my MacBook Pro. In my somewhat enforced and custom setup, Dragon operates on the same hardware, microphone and audio environment as Voice Control but is streets ahead in terms of accuracy and productivity. As someone observed recently, it’s like comparing a Formula One car with a skateboard. Apple’s dictation application is just not stable, accurate or productive at the moment.

For a company that traditionally produces stuff that just works, and often comes late to the show with a device or service but smashes it by making their own offering so much better than what is available elsewhere in the market, it is strange to see Apple dictation so inferior to Dragon Professional for Windows in its present form, albeit still in beta. It is almost completely unusable for people who rely on speech recognition for corresponding.

I am guessing Apple has to develop things from scratch, so it will now have to go through the same product evolution with Voice Control that Nuance Dragon went through some 20 years ago. Since technology evolves faster nowadays, Apple won’t have to spend as much time as Nuance did with Dragon, which didn’t become highly accurate until 2012, a long way from its initial launch in 1997.

Siri, being far newer, is still very accurate considering how recent it is. It still only works well for short phrases because Apple spent those years working on it just for short phrases. In the last 12 to 24 months the company has just started working on voice in general, so you could say it started this in 2017 and in two years it has come quite a long way.

However, the current performance of dictation is nowhere near accurate enough for folk like me. Try composing a short work email of a few paragraphs using Voice Control and it is a very frustrating experience.

I hope Apple can improve Voice Control considerably before final release in little over a month from now. In its present form I have serious doubts. I write as someone who really wants the company to succeed with Voice Control, and voice access generally across devices. It’s a critical time in its development. I hope Apple is listening to feedback, which I am leaving them (or was until they removed the option from their Feedback Assistant).

If dictation is not productive, people will find it frustrating and not use it. Fatigue is an issue many severely physically disabled people experience, and constantly having to correct words due to poor recognition can be draining. Having tried dictation in macOS Catalina public beta, I cannot recommend it at the moment.

The inclusion of Voice Control across its devices is one of the biggest pro-accessibility moves Apple has ever made. But with a month to go until final release, it is very late in the software development cycle to influence the company to get its prestigious accessibility offering spot on for severely physically disabled people, and indeed for anyone who wants to control their devices by voice. At the moment it is far from it.

Sue Kearney

I understand this article is old, but I’m asking anyway…. I’m having such a hard time finding much at all about my question.

I’ve used Voice Control on my MacBook Pro, and find that it runs really, really slow, noisy, and hot when I do so.

I’m about to buy my next (and last, I think) Mac. And I want to figure out what I need in terms of processing speed and memory to allow me to use Mac accessibility features and still have nimble response time from the computer.

Apple sales reps haven’t really been able to answer my questions.

Any suggestions for me?

Thanks!