Technology

Ray-Ban Meta smart glasses must become true smart home controllers

Ray-Ban Meta smart glasses are often presented as content tools — devices for creators capturing everyday moments. That framing is far too narrow. If Meta is serious about ambient computing and long-term mass adoption, then smart home control must become central to the product's evolution: first by voice, and ultimately through the EMG-based Meta Neural Band, which Meta is now researching in collaboration with the University of Utah. This is not about novelty. It is about autonomy. I have argued repeatedly on Aestumanda that the glasses should integrate with smart home systems — including the ability to open my own front door hands-free. The case has only grown stronger.

From lifestyle accessory to assistive infrastructure

A recent LinkedIn post from Bob Carter, CEO of University of Utah Health...

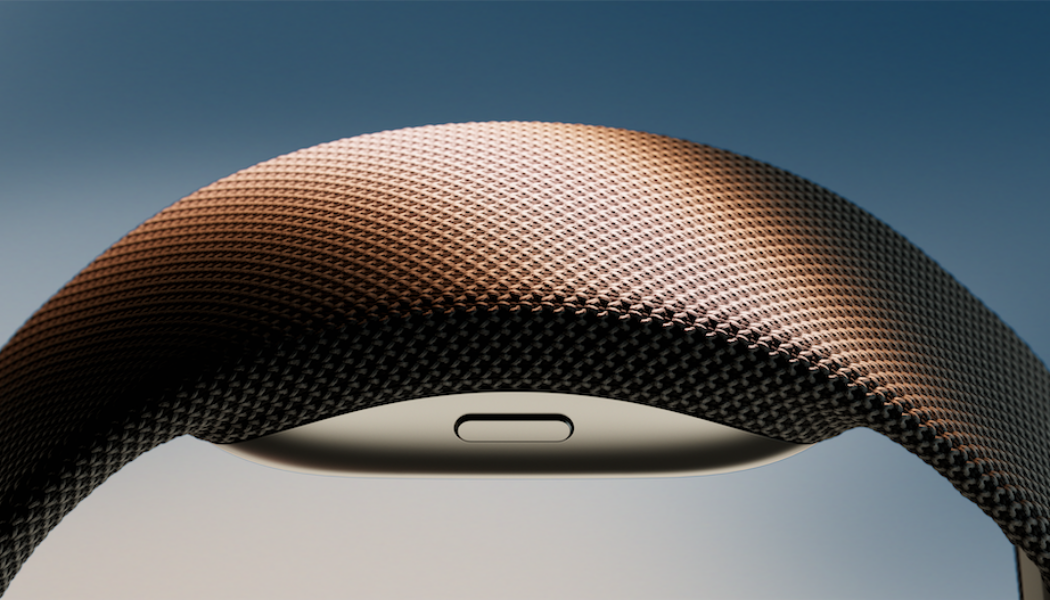

Meta’s rumoured Malibu 2 smartwatch could finally make wearables accessible

Meta is reportedly developing a smart fitness watch, internally codenamed Malibu 2. At first glance, this may sound like just another entrant into an already crowded wearable market dominated by Apple, Samsung, and Garmin. Fitness tracking, heart rate monitoring, notifications — these are well-established features. But Malibu 2 could represent something far more significant. If Meta integrates its emerging neural interface technology into a smartwatch, it could solve one of the most fundamental accessibility failures in modern wearable devices. For many severely disabled people, today's smartwatches are not accessible at all.

The invisible barrier: wrist raise

The problem is deceptively simple. Every mainstream smartwatch relies on physical gesture. To wake the screen, interact with notifi...

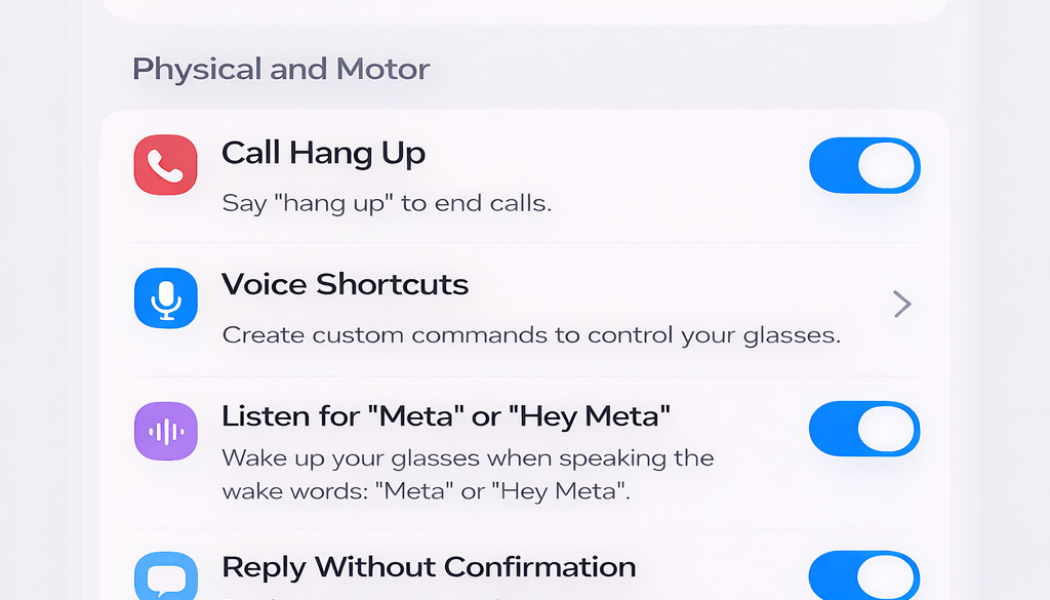

Why the Meta AI app needs a physical and motor disability section for the company’s smart glasses

Ray-Ban Meta smart glasses have been life-transforming for me and for many others with severe physical disabilities. They already show how powerful voice-first wearable technology can be, removing the need to reach for a phone, press buttons, or interact physically with hardware. For me, that's already meant being able to take my own photos and videos hands-free, using my voice alone. No phone to hold, no shutter button to press, no need to ask someone else. It's a small interaction, but a deeply liberating one, and a clear example of what voice-first technology can make possible when physical barriers are removed. That level of independence already exists in the product itself, which is precisely why the limitations of the Meta AI app matter so much. An ...

EMG, smart homes and personal safety: a use case worth considering

Earlier this month at CES 2026, Meta announced a new research collaboration with the University of Utah exploring how consumer-grade EMG wrist wearables could support people with different levels of hand mobility, including for smart home control. Using the Meta Neural Band, the research will examine how electrical signals generated by muscles at the wrist can be translated into digital input. Importantly, this work is not limited to navigating smart glasses. The stated aim is to explore how custom EMG gestures could be used to control everyday devices such as smart speakers, blinds, locks and thermostats. As part of this work, the University of Utah team is also testing the precision of EMG input by using the wristband to steer the TetraSki, an adaptive ski designed for people with complex physi...

A new smart lock hints at what accessibility-first smart homes could become

The arrival of a new generation of hands-free smart locks, such as the Aqara U400, is one of those moments where a feature framed as convenience quietly reveals something much more important. Using Ultra Wideband (UWB) and Apple Home Key, the lock can unlock automatically as you approach with an iPhone or Apple Watch. It represents a shift toward Zero-UI interaction: there is no tapping, no Face ID prompt, no voice command, and no app interaction. You simply arrive at your front door, and it opens. For some people, that's a nice quality-of-life upgrade. For others, it's a glimpse of what truly accessible smart homes could look like.

Why hands-free automation matters for accessibility

Smart home accessibility is still too often reduced to voice control. Voice assistants have undoubtedly hel...

Meta visited me to test the Meta Ray-Ban Display glasses and Neural Band

Meta brought the future to my doorstep

Two weeks ago, something quite extraordinary happened. Meta Reality Labs visited my London home to let me try the new Meta Ray-Ban Display glasses — the first model with a built-in display — bundled with the Meta Neural Band wristband. Officially released in the US on 30 September 2025, and priced from $799, the devices aren't yet available in the UK, so having them brought directly to me felt both a privilege and an honour. I live with a muscle-wasting condition called muscular dystrophy, which means I have very limited movement in my fingers, wrists, and arms. Everyday interactions most people take for granted — like pressing a button or tapping a touchscreen — can be physically impossible for me. That's why innovations like the Neural Band are so f...

Apple confirms Voice Control bugs in macOS 26

Apple has acknowledged a series of bugs affecting Voice Control in macOS 26, the company's built-in accessibility feature that allows people to fully operate a Mac by voice. Over the past week, users have reported that several key commands are not working as expected, with some issues seriously impacting productivity. After a detailed bug report was submitted, Apple's accessibility team confirmed it is aware of the problems and has passed them on to engineering for investigation.

The bugs

The issues confirmed by Apple include:

• "Delete that" command malfunctioning — instead of deleting the last dictated phrase, the command only removes the final character.
• Invisible cursor in Mail — while dictating, the text caret disappears halfway through a line, making it impossible to see where edit...

Why Meta’s Ray-Ban Display glasses feel like the next step for accessibility

This line from Meta's announcement of the new Ray-Ban Display glasses with Neural Band really struck me: "Think of the potential impact it could have for people with spinal cord injuries, limb differences, tremors, or other neuromotor conditions." I haven't tried them yet — they don't launch until 30 September, and only in the US and select stores at first — but as someone with very limited upper limb mobility, I can already see why this feels different.

Beyond another wearable

The first generation of Ray-Ban Metas already brought me into the fold. For the first time, I could wear glasses that looked like glasses, but also gave me voice control, hands-free photo and video capture, calls, messaging, and AI assistance. That was a step towards independence. But wrist wearables from Apple, Goo...

WWDC 2025: accessibility gains, missed chances, and what Apple still needs to fix

Yesterday's WWDC 2025 keynote brought Apple's bold new Liquid Glass design, system-wide renaming (e.g., iOS 26, macOS Tahoe 26), and a big push for on-device Apple Intelligence—available now in eight more languages, and open to developers everywhere. We also saw major updates to iPad multitasking, Spotlight, Xcode 26 with foundation LLM support, and even lighter Game controls thanks to Liquid Glass's fresh UI.

✅ Notable accessibility updates

Announced via press release last month, Apple quietly introduced a number of genuinely welcome updates, available to try now in the betas released after the keynote:

• Live Captions on Apple Watch: real‑time captioning during calls via the Live Listen mic, controlled remotely from the Watch.
• Voice Control improvements: developers can now use Voice Control...

WWDC 2025: Can Apple finally fix Voice Control?

As WWDC 2025 approaches, rumours swirl about what Apple may unveil: enhanced AI features, a new naming convention for all the operating systems, and a visual glass-like overhaul, including round Home Screen icons. There's also speculation about improvements to Apple Intelligence, a brand that, despite the hype, hasn't exactly set the world on fire. But for disabled people like me, all eyes are on something less flashy but far more consequential: Voice Control.

Voice Control today: clever, capable—and still flawed

Apple's Voice Control, first introduced six years ago in macOS Catalina, allows hands-free control of a Mac, iPhone, or iPad. It was a game-changer for many disabled people, particularly those with motor impairments who rely on dictation and voice navigation. But its limitations are ...