In the year Apple has released its most significant accessibility initiative ever, it may not be popular to say that in some areas Apple is failing its most severely disabled consumers, but it has to be said.

Despite the introduction of Voice Control, the company’s new voice recognition tech, Apple has fallen short when it comes to providing comprehensive access for users with certain physical motor conditions whose only option is to control their Apple devices with their voice.

I am quadriplegic as a result of muscular dystrophy, which means I have difficulty using the iPhone screen, Apple Watch face, and MacBook keyboard for writing an email, sending a message, posting to Facebook and Twitter, or controlling my smart home. Rather than typing on a screen or keyboard, as many people do, I rely on voice recognition tech to get things done.

After several months of new product and operating system releases, including iOS 13, macOS Catalina, Watch Series 5, iPhone 11 and AirPods Pro, Apple has come to the end of this year’s release cycle. These are my conclusions on how extensive and effective voice access is on devices such as the iPhone, Apple Watch and Mac computer.

I am literally pulling my hair out with frustration every day (if only I were able to) as I struggle to write an email, send a text message, hang up a phone call, and post to Twitter and Facebook. It so doesn’t have to be like this.

As Apple puts voice control centre stage, a light needs shining on some significant shortcomings in speech recognition tech across the company’s operating systems and devices. Here is my rundown of the changes and improvements the company needs to make to its voice tech strategy if it is to offer a truly inclusive, joined-up voice experience for everyone.

Voice Control

Apple made a lot of capital out of the release of Voice Control, which was warmly welcomed when it was unveiled with a moving film of it in action at the Worldwide Developers Conference keynote in June. The mainstream technology media reported on it extensively and has been overwhelmingly uncritical, probably because most reporters will never need to rely on the technology to do their jobs.

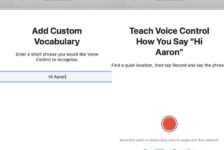

It is designed for people who may not be able to use traditional methods of text input on a Mac computer, iPad, or iPhone, and it has two main functions: allowing people to dictate emails or messages with their voice, and to navigate their screen with commands such as “open Safari” and “quit Mail”.

Voice Control is good for navigation by speech commands but hopeless at accurate dictation, which makes it frustrating and not at all productive to use. It’s a sad indictment that I, as a quadriplegic, do not use it at all. It would take me several hours if I tried to dictate this article with Voice Control dictation, as the software repeatedly fails to convert my spoken words into text on the screen correctly. It doesn’t come close to Dragon Professional, the leading speech recognition software on the market, in terms of accuracy, productivity and sophistication. Nuance, who make Dragon, discontinued the Mac version of their product last year, meaning Voice Control is really the only speech recognition product available at the moment if you want to dictate on an Apple device.

Some of the failings in dictation are due to the fact that only US English is powered by the Siri speech engine for more advanced speech recognition at the present time. No word as yet from Apple on when UK English will be added. Perhaps, though I can’t be sure, it will improve for me when UK English is added.

Voice Control is a start but needs to develop and grow if it is to be useful for people. It feels like Apple is only at the beginning of developing truly productive voice recognition technology, which will accurately transcribe the words you speak into text on a page. For a company that has a track record of nailing it with almost every new product or application it releases, it is a little disappointing to see how mediocre Voice Control dictation is at the moment. It is not the game changer many were hoping for back in June when it was first unveiled to warm applause.

It’s a bit like having The Force

One of the most exciting and useful product releases this year is not an accessibility initiative at all, not in Apple’s eyes at least. It is the second-generation AirPods with built-in Siri, the company’s upgrade to its ubiquitous and iconic wireless earbuds.

It wasn’t long ago that true wireless earbuds weren’t very good. Solid connectivity was a challenge, dropouts happened all the time, and battery life was dire. Not anymore. Apple changed the game with the release of the first AirPods in 2016, and this year it has changed it again by bringing hands-free Siri to its earbuds.

Access to intelligent assistants like Siri is key to their mainstream consumer appeal now, and for physically disabled users being able to get things done with help from Siri brings a freedom from confinement hitherto unknown. For people who have difficulty handling an iPhone or Apple Watch, the hands-free voice capabilities that wireless in-ear headphones now offer are so liberating.

These wearable on-the-go products let me take Siri and her voice control capabilities with me all day. I can go out alone in my wheelchair and feel secure by being able to make phone calls from my iPhone or Apple Watch with a Siri voice command. I can check the time by asking Siri via the AirPods. I can play my favourite music playlist, again by asking Siri. I can send friends and family a text message. In all these small but significant ways AirPods with built-in Siri have levelled the playing field and allowed me to interact with my iPhone and Apple Watch almost like anyone else. The benefits for me are in terms of both social interaction and personal safety.

I also wear the AirPods around my home and use them to turn on connected lights and set the thermostat. As one friend told me: “It’s a bit like having The Force”. It’s just a pity Apple HomeKit does not integrate with more smart home devices such as my electric window blinds and smart kettle.

However, significant rocks in the road remain. Despite the release of two new AirPods models this year, second-gen AirPods in March and now AirPods Pro, you still can’t use a Siri voice command to end a phone call. How dumb is that? You can place a call hands-free by a voice command but you can’t end it. This gets me into so much trouble day to day: I get stuck in people’s voicemail boxes when they don’t pick up my calls, because I am unable to touch the iPhone screen or Apple Watch face to press the red button to end a call. It reminds me of the Blondie song: Siri, why do you keep me hanging on the telephone? If I am unfortunate enough to receive a nuisance or abusive call there is nothing I can do to end it. Come on Apple, this situation can’t be allowed to continue.

It is my understanding you can hang up calls by a voice command with Amazon’s Echo Buds and Google’s forthcoming second-generation Pixel Buds though I am yet to try them.

Announce Messages with Siri is another great new feature in iOS 13 that Apple does not bill as an accessibility feature. But by enabling anyone with AirPods to have their messages read out to them by Siri automatically, and to dictate a reply, it clearly has a lot of use for people who have difficulty interacting with their iPhone screen. I find the feature incredibly useful.

One area where Apple needs to brush up its act is in providing information that can be useful from an accessibility point of view. When the AirPods Pro were released recently, the accompanying publicity material described the new active noise cancellation feature and how it can be controlled either by pressing a force sensor on the stem of the AirPods, or from the Apple Watch, both of which I am unable to do. Nowhere in its description of the product did Apple mention that you can also turn noise cancellation on and off with Siri voice commands. It is really strange that they left this out as it is so relevant to people like me.

Fortunately, I found an unboxing video on YouTube and the reviewer demonstrated how Siri can turn active noise cancellation on and off. I just can’t for the life of me understand why Apple could not have given that feature a nod at least in their description of the product features. It is actually a selling point for people like me, and for many others I would imagine. I know they can’t put absolutely everything into the publicity but this is one small bullet point, a couple of words even.

This is what Apple said in its website publicity: “Want to hear what’s happening around you? Just press and hold the force sensor on the stem to jump between Active Noise Cancellation and Transparency mode.” All the company had to add was “or just ask Siri”, or something like that.

Hands-free control of active noise cancellation is great news. I just feel the company could be doing more to inform disabled users of all the features that can be helpful.

Auto-Answer

Auto-Answer is a little-known feature buried away in the accessibility settings on iPhones that enables phone calls to be answered automatically. It is really useful for people who cannot easily reach for their iPhone and touch the green answer icon on the screen when a phone call comes in.

But the problem is that for the people who rely on it as the only option for handling phone calls, you still can’t toggle Auto-Answer on and off by a Siri voice command, or create a Siri Shortcut where, every time you put your AirPods on, phone calls are answered automatically, and every time they are taken off they are not. This would be incredibly useful for people in my situation. Many other accessibility features can be activated by a Siri command but, after a year of feeding back to Apple, my feature request for this has fallen on deaf ears at the tech giant.

Apple Watch

The Apple Watch cellular needs the Auto-Answer phone calls feature added; the ability to toggle it on and off by a Siri command; and the ability to hang up a phone call by a Siri voice command.

I have been reading of someone who would like Auto-Answer on an Apple Watch for an elderly family member with dementia who does not remember to press the green button on the iPhone or Apple Watch to answer a phone call in the conventional way. The writer says Auto-Answer would be amazingly helpful for people who need to keep track of elderly loved ones who don’t like the idea of carrying a cell phone, and refuse to wear GPS devices for wanderers, but are still willing to wear a watch.

For me, with a physical motor disability, Auto-Answer on the Apple Watch will mean more independence and personal security. I will be able to leave my home in my wheelchair without the need to pick up my iPhone (which I am unable to do), and I’ll be able to respond to incoming phone calls from friends, family and carers.

If Apple can provide these features on the Watch it will offer a more complete, approaching holistic, voice control experience. Until it does there are huge gaps in its voice offering on the Apple Watch, which are really curtailing my independence, and that of many people like me.

My hunch is there aren’t any huge technical or financial barriers to adding these features. During the summer there was an outcry for Siri control of Dark Mode and Apple duly obliged. But accessibility isn’t sexy and may not shift units, and Auto-Answer is a little-known feature buried deep in accessibility settings, so getting the company to focus on it is proving difficult.

More inclusive

Voice Control is a joint effort between Apple’s Accessibility Engineering and Siri groups. Its aim is noble – to transform the way users with physical disabilities access their devices. You talk, it gets things done for you.

Yet on a broader philosophical level, Apple chose to bring in Voice Control as a dedicated accessibility feature when it could have done very similar things by expanding the capabilities of Siri, so that voice control of devices could be for everyone. That would have been more inclusive, and it is what I would have liked to see.

There is a danger Voice Control could become a bit of a ghetto, not used by many, not updated and improved often, except on the odd occasion when Apple wants to show off its accessibility credentials.

The importance of voice recognition features, wireless in-ear headphones, and mobile voice assistants will only grow. Wearables are a potentially huge technology sector, which Apple and its competitors clearly recognise. It is not just people with accessibility issues who will be driving growth and calling for ever greater voice capabilities; there is growing appeal among general consumers as the voice-first revolution gathers pace.

Sarah Herrlinger, Apple’s head of accessibility, has said: “When you build for the margins, you actually make a better product for the masses”. She is right. Make things easier for people like me to use and you make things easier and more convenient for everyone. That is a strong selling point these days.

Hopefully, in the coming year we will see Apple address these gaps in its voice tech to the benefit of everyone who wants to control their devices with their voice.

David

Hi you probably know this already; but HomePod Mini can do voice call hang up now. It’s ok – takes a couple of goes sometimes to enact the command; but it does work, cheers, David