Autumn has been a busy period for Apple. Following a summer of beta testing, it released major updates to its operating systems, including iOS 15, watchOS 8 and macOS Monterey.

The company also released a bunch of new hardware, including iPhone 13, Apple Watch Series 7, AirPods 3 and new Apple Silicon MacBook Pro laptops.

Over the past few weeks, I have managed to get my hands on the new iPhone 13 Pro, Apple Watch Series 7, and AirPods 3 and have been trying them out along with iOS 15 and watchOS 8. Here’s my experience of how accessible Apple gear is in 2021 for anyone who uses a wheelchair and has limited use of their hands.

Auto-answer

The introduction of an auto-answer feature on the cellular Apple Watch, which answers calls automatically, has been one of the standout accessibility developments for me this autumn.

Over the past few years, I have been calling on Apple to bring auto-answer to the cellular Apple Watch for people who have problems touching the Watch screen. It’s been available on the iPhone for several years, so its omission from the cellular Apple Watch, which is a wrist-worn phone, always seemed strange. I turned on the feature recently on the new Apple Watch Series 7 and soon received a phone call. My disability means I can’t touch the Watch screen, but auto-answer kicked in and I didn’t need to touch or press anything to answer the call. This new functionality brings a level of convenience, security and accessibility that’s so important to disabled people with upper limb differences.

However, there is still no option to enable auto-answer with your voice, so a feature designed for people who can’t touch the screen still requires you to touch the screen to turn it on and off! It’s long been the same with auto-answer on the iPhone. Surely this nonsensical situation can’t be allowed to persist for much longer.

I would like users to be able to turn auto-answer on and off with Siri voice commands (“Hey Siri, turn on/off auto-answer”) and by setting up various Siri Shortcuts: for example, turning on auto-answer when I put the Watch on my wrist, when I leave home, or at a certain time.

There also appears to be a bug with the way auto-answer is implemented on the Apple Watch: it remains active when the Watch is on its charger rather than your wrist. This shouldn’t happen. Like many, I charge my Watch by my bed, so it gets topped up for sleep monitoring. It’s a bit unnerving to have calls automatically answered when the Watch is off my wrist and in a bedroom.

Announce Calls and Announce Notifications

iOS 15 introduces a new Announce Notifications feature for Siri. It builds on the “Announce Messages” and “Announce Calls” features, giving Siri the ability to announce notifications from first- and third-party apps.

Whilst not a dedicated feature for disabled people, it has huge benefits for accessibility and means a more seamless experience for hearing and responding to alerts without having to pick up and unlock your device or say “Hey Siri.”

Here’s how Apple describes the new functionality:

“Have Siri read out notifications without having to unlock your iPhone when 2nd generation AirPods and some Beats headphones are connected. Siri will avoid interrupting you and will listen after reading notifications so you can reply without saying ‘Hey Siri’.”

The new and improved functionality has been massive for me. Every day I answer calls effortlessly and hands-free, just by saying the word “Answer”. Previously, unless I had auto-answer on (which unfortunately still requires me to ask someone to switch it on for me), I was never able to answer calls. This really increases independence for people with upper limb differences.

It’s also been great, for the first time, to have notifications from third-party apps like WhatsApp and Facebook Messenger read out to me while I’m wearing AirPods. As someone who can’t pick up and unlock my iPhone to read messages and notifications, I find this new functionality makes me feel connected like never before. I’ve dealt with important WhatsApp messages, Outlook emails and more entirely hands-free, responding to them and acting on important things promptly using only what I hear through my AirPods. This is really liberating and productive.

Siri

Siri, Apple’s often-denigrated voice assistant, is improving and widening accessibility opportunities within the home for disabled people. Using a HomePod mini, iPhone or Apple Watch, I can control more of my smart home devices with my voice, including my front door, which lets me get in and out of my flat independently just by saying “Hey Siri, open the door”.

However, despite all this amazing progress there is still room for improvement when it comes to accessibility on Apple devices.

Phone calls

You still can’t hang up a phone call on the iPhone or Apple Watch with a Siri voice command (“Hey Siri, hang up”), which causes me problems most days as I can’t press the red button to end a call. The good news is that I feel confident Apple is aware of this gap in provision and that a solution is coming; it’s now a question of when, not if.

AssistiveTouch for Apple Watch

When I got the Apple Watch Series 7, one of the first features I was excited to try out was AssistiveTouch for Apple Watch, which Apple announced in May as coming to the Apple Watch Series 6 and 7 this autumn. It’s a feature specifically designed for people like me with limited upper limb mobility. Here’s what the company has to say about it:

“AssistiveTouch for watchOS allows users with upper body limb differences to enjoy the benefits of Apple Watch without ever having to touch the display or controls.

Using built-in motion sensors like the gyroscope and accelerometer, along with the optical heart rate sensor and on-device machine learning, Apple Watch can detect subtle differences in muscle movement and tendon activity, which lets users navigate a cursor on the display through a series of hand gestures, like a pinch or a clench. AssistiveTouch on Apple Watch enables customers who have limb differences to more easily answer incoming calls, control an onscreen motion pointer, and access Notification Centre, Control Centre, and more.”

After setting up the Apple Watch Series 7, I soon discovered I am unable to activate AssistiveTouch with the limited muscle power in my arms and hands. It appears I just don’t have enough physical movement to trigger the feature. It’s made me question who Apple designed AssistiveTouch for, because in theory it should be ideal for people like me. I have just enough arm and wrist movement to wake the Watch screen and clench my fist, but much of the technology relies on users being able to lift their wrist and look at the screen, and on having the wrist movement and muscle power to do things, which unfortunately I don’t have.

AssistiveTouch is an interesting and smart idea, but its implementation falls short of what people with severe upper limb disabilities need. Hopefully, the company will improve the technology and extend access.

Voice dictation

Voice dictation on desktops and laptops, both Apple and Windows, isn’t in a great place right now. My productivity hangs by a thread, thanks only to Dragon Professional, the Dragon extension for the Firefox browser, the Dragon add-in for Outlook, and being able to run Dragon Professional under Parallels Windows virtualisation on my MacBook.

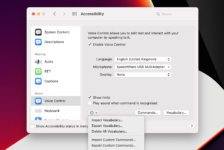

Sadly, voice dictation on the Mac performs poorly when dictating large amounts of text. Voice Control, Apple’s speech recognition software, hasn’t improved much this year and is only good for dictating a sentence or two, often riddled with errors. You couldn’t write a 1,000-word blog article, run a business or write a dissertation with its dictation capabilities. It would take you hours of frustration compared with Dragon Professional.

As an Apple user, I am looking enviously at what Google is doing with speech recognition on the new Pixel 6 phone with the Tensor chip. I have yet to try it, but it offers the kind of advanced speech recognition I would like Apple to provide for users who rely on voice dictation on the Mac and iPhone for work, education and staying connected.

Hopefully, in 2022 we will see Apple improve the accuracy of dictation, and the sophistication of voice editing, on all of its devices that can be dictated to and controlled by voice. At the moment, the company is being left behind by Google’s voice capabilities and its new Tensor chip.

Access to technology and communication is a human right, and for some people voice dictation is the only means of communicating with the world and doing grown-up things that go much further than dictating “happy birthday” with a cute emoji. Disabled people who rely on voice access deserve better than that, and I believe the big tech companies can and should do more. It’s not just Apple: I have tried the new Voice Typing tool in Windows 11, and it’s similar to the limited dictation capabilities of Voice Control.

Face ID not working with CPAP masks

Apple’s facial recognition tech worked perfectly when I wore my CPAP mask, all the way from the iPhone X four years ago up to last year’s iPhone 12, but it has stopped working with the iPhone 13 and its more compact notch.

It seems that hardware changes, along with software changes Apple made in response to Covid mask wearing, may have had an unfortunate side effect for those of us who wear CPAP masks.

I have been using Unlock with Apple Watch, an iOS feature introduced as a result of the Covid pandemic that lets you unlock your iPhone while wearing a face covering. However, it is a £500 workaround (you need an Apple Watch to use it) for something that worked previously, and it has shortcomings: it won’t work with banking apps, so you still need to enter your passcode for those. Face ID’s failure to recognise me when I’m wearing my CPAP mask with the iPhone 13 Pro is a major step backwards for accessibility on the iPhone.

Wrap up

When it comes to accessibility generally, there is a lot to be thankful for in Apple’s 2021 releases, but clearly there is still work to do if the company’s devices and software are going to be fully accessible for disabled people with severe upper limb differences.

To be fair to Apple, it does more than many when it comes to accessibility and there were several accessibility-related features introduced this year for a range of impairments, including hearing, vision and physical disabilities. I am very much hoping Apple engineers will iron out the above flaws, and related shortcomings, in the coming year.

Ultimately, what is needed to solve the problems highlighted here is a shift in the way software and hardware development is approached, and not only by Apple. My own view is that if every tech company put inclusive design first, even more than they already do, we would be in a better place.