Meta is struggling to retain users for its Ray-Ban Stories smart glasses, with over 90 percent of consumers having abandoned the platform, according to a report by The Wall Street Journal.

Since their launch in September 2021, the smart glasses have, for some at least, brought a new dimension of connectivity and convenience to daily life. Born of a partnership between Meta and Ray-Ban, the Ray-Ban Stories have already made a remarkable impact on my life, allowing me to take photos and videos for the first time.

However, there is always room for improvement. With a second generation of Ray-Ban Stories rumoured to still be in the pipeline despite the current model’s low user retention, here are five ways in which Meta can enhance the smart glasses, making them even more user-friendly and versatile.

Do Not Disturb mode activated by voice command

One of the most useful aspects of Ray-Ban Stories is their integration with messaging services like Meta-owned WhatsApp and Facebook Messenger, allowing users to listen to incoming messages and reply hands-free.

However, there are times when users need to remain undisturbed while engaged in important activities. With a voice-activated “Do Not Disturb” mode, Ray-Ban Stories could filter out incoming messages whenever the user indicates they are occupied. This way, users can stay focused and uninterrupted during critical tasks, whether in a meeting, during an exercise routine, or in any other activity where notifications may prove disruptive.

Longer message playback with Facebook Assistant

The current limitation of reading out only short messages of 10-16 words, or 90-93 characters, can be a hindrance to a seamless hands-free user experience, with Ray-Ban Stories users forced to open their phone to access longer messages.

To address this roadblock, Meta should optimise the Facebook Assistant to read out longer messages in full. Users could then stay informed without reaching for their phones while wearing the smart glasses, making communication more efficient and truly hands-free.

Verbally dictate emoji for enhanced messaging

Communicating emotions can be challenging, especially when relying solely on text-based messages. Ray-Ban Stories should let users verbally dictate emojis, interpreting the spoken name and inserting the corresponding emoji into the message the recipient sees. This feature would add a touch of personalisation and emotional expression to interactions, making conversations more lively and enjoyable for both parties.

One of my biggest annoyances at the moment is the way the Stories insert a full stop at the end of a short dictated message. It makes communication appear blunt, curt and lacking in empathy.

Silent photo and video capture with a smart button

In social situations like a small party or gathering, capturing the perfect moment with the camera on the Ray-Ban Stories is essential, but verbal commands like “Hey Facebook, take a photo” might not always be appropriate or discreet.

There is the option of clicking a button on the arm of the Stories. But in a nod to accessibility, and for users who can’t raise their hands to the arms of the glasses, Meta should integrate the Ray-Ban Stories with an existing smart button like the Flic 2 Button to trigger the taking of a photo or video. If the company wanted to be especially futuristic, it could develop photo capture triggered by facial expression, using on-board sensors built into the frames of the Stories.

This discreet photo capture feature would ensure that users of all abilities can seize the moment without drawing unnecessary attention or disrupting the mood of the occasion.

Last week, I captured this emotional moment with the Ray-Ban Stories.

It was the moment when, after almost four years, I got to see my mum and dad, 85, face to face again. The three of us are vulnerable to Covid and have other issues that prevented us from having this much-needed catch-up in London until now. I was only able to capture this event with the help of voice commands on the Ray-Ban Stories, as my disability means I am unable to take videos and photos any other way. Whilst I am grateful I can now do this, it would have been good to initiate the video silently with the click of a smart button rather than a verbal “Hey Facebook, take a video” command.

Better smartphone integration

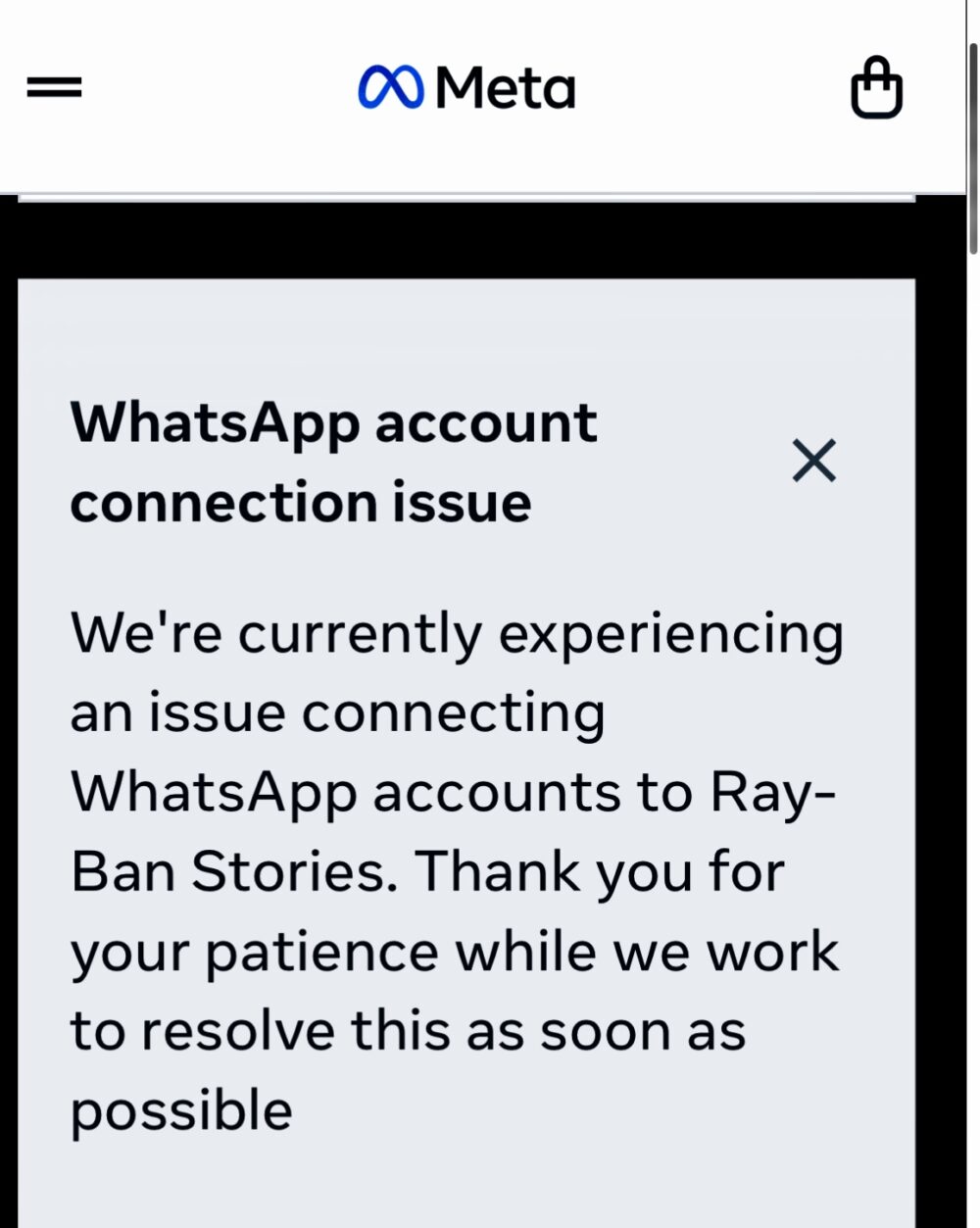

According to The Wall Street Journal, various technical issues affecting Meta’s Ray-Ban Stories have contributed to poor user experiences and to people abandoning the smart spectacles.

The issues include problems with audio and voice commands, poor battery life, and difficulty importing media from other devices.

At the moment, users are experiencing an issue connecting WhatsApp accounts to Ray-Ban Stories, which is surprising as Meta owns both platforms.

A lot of these issues relate to the lack of solid Bluetooth and Wi-Fi connections between the glasses and the accompanying View app, and to Meta not having its own smartphone to offer. If it did make a phone, the company could do much more to ensure reliable integration, connectivity and performance. As it is, Meta is in the hands of phone manufacturers like Apple, Google and Samsung for ensuring a good user experience.

If Meta wants Ray-Ban Stories to succeed, I urge the company to double down on software and smartphone integration, ensuring a reliable connection both when messaging and when downloading photos from the Stories to the View app on a phone.

The company should also improve the camera on the glasses and give users an easy way to message the last photo they have taken to a friend, with a voice command such as “Hey Facebook, send my last photo to Nancy”.

Conclusion

Meta is under pressure, with only 27,000 of the 300,000 units reportedly sold between September 2021 and February 2023 still being used regularly each month. However, the company still has an opportunity to elevate these smart glasses to new heights by implementing these suggested improvements.

The addition of a voice-activated “Do Not Disturb” mode, extended message playback with the Facebook Assistant, emoji dictation, smart button integration for accessible and silent photo capture, and more reliable smartphone integration would make Ray-Ban Stories an even more indispensable tool for daily life.

By combining style with functionality, Meta can solidify Ray-Ban Stories’ position as a leading smart glasses product, catering to the needs of all types of users in various scenarios. As we eagerly await future updates and innovations, these five enhancements could mark the next exciting chapter in the evolution of smart eyewear, revolutionising how we stay connected and experience the world around us.

Whilst obviously a first-generation product with understandable limitations, the Ray-Ban Stories have been a game changer for me, allowing me to message hands-free and take photos and videos for the first time. To boost sales and interest, perhaps the company should play up the accessibility potential of the glasses, something it doesn’t do at the moment. Given the way the glasses open up endless possibilities for me, I would be sad if we didn’t see a new and improved second generation of the Stories.

If you have Ray-Ban Stories, what improvements would you like to see in a second generation of the smart specs? Let us know in the comments.