Apple’s press release for the new M3 MacBook Air, launched this week, described the machine as the “world’s best consumer laptop for AI.” The claim comes ahead of WWDC 2024, when the tech giant is expected to announce a raft of new artificial intelligence features across all of its platforms.

Apple CEO Tim Cook has teased the company’s plans for new artificial intelligence (AI) announcements twice this year. Last month, he said Apple will share details on its AI work “later this year”, and during a shareholder meeting last week, Cook said that Apple will “break new ground” in generative AI this year.

Faced with stiff competition from Microsoft, Google, and OpenAI, Apple is no doubt working on a variety of new AI features for later this year, including a more powerful version of Siri.

All this talk of AI has got me thinking about the implications of artificial intelligence for disabled people’s access to technology generally, and more specifically what it could mean for Apple’s flagship accessibility application Voice Control.

According to the World Health Organization, with an ageing global population and a rise in noncommunicable diseases, an estimated 3.5 billion people will need assistive technology by 2050. No surprise, then, that accessibility has become a big deal, with drives to ensure that all users, regardless of their abilities, can benefit from the digital revolution.

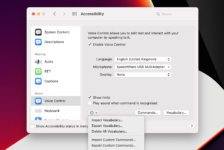

Apple’s Voice Control is a testament to this effort, offering a hands-free way for users to interact with their devices. However, despite its innovative approach, Voice Control is not without its flaws. This article delves into how AI could be the key to refining and enhancing this vital tool for disabled people.

The current state of Voice Control

Apple’s Voice Control app is a powerful and innovative feature that allows users to operate their iPhone, iPad, or Mac without the use of their hands. It was unveiled at WWDC in 2019 with great fanfare and promises of breakthrough features to help disabled people better use all the features on iPhone, iPad and Mac. The app certainly excels at navigating devices with your voice as well as dictating short messages like “happy birthday” or “I will be home in 15 minutes”.

However, five years on from that unveiling, Voice Control has been shown to have several shortcomings that frustrate and disappoint many users, especially those with severe physical disabilities who can’t take to the keyboard to clear up frequent recognition glitches.

Failings plaguing Voice Control users include:

1. Accuracy of dictation

Voice Control often makes basic errors in dictated text. For example, “for” is often confused with “four”, and the verb “will” is confused with the man’s name “Will”. These constant glitches leave users with a frustrating dictation experience. The application does not learn from mistakes like these, so they keep happening over and over.

2. Lack of basic operations

Voice Control does not support some common tasks that keyboard and mouse-equipped users take for granted. While you can dictate into any app, you can only edit text in certain apps. Ideally, Voice Control should work everywhere. More recently, in macOS Sonoma, the command “new paragraph” often isn’t recognised.

3. Proper noun and grammar frustrations

Voice Control struggles to recognise and handle proper nouns correctly, such as names, places, or organisations. Proper nouns are often disregarded when it comes to capitalisation. Adding proper nouns to custom vocabulary with capital letters doesn’t solve the problem.

For severely disabled people, being able to dictate accurately can make or break a day. It’s much more than a convenience – voice dictation technology is a line to the outside world, including interacting with friends and loved ones on messaging apps, personal emails and administration, and posting on social media.

The AI solution

AI has the potential to address these issues head-on, transforming Voice Control into a more intuitive and reliable assistant. Here’s how AI could make a difference:

1. Enhanced dictation with natural language processing (NLP)

NLP could significantly improve dictation accuracy. By understanding context and user intent, NLP can provide more accurate transcriptions, even in noisy environments or with complex vocabulary.

2. Adaptive command functionality through machine learning (ML)

ML algorithms can learn from a user’s interactions, tailoring commands and responses to individual needs. This personalised approach could simplify tasks, making the app more user-friendly.

3. Improved proper noun recognition with AI

AI can assist in recognising and interpreting audio data, and could lead to better handling of proper nouns, ensuring names, organisations and places that matter to you are always understood correctly.

4. Predictive text and autocorrection

AI can offer improved predictive text options and autocorrect errors in real time, streamlining communication and reducing the need for corrections.

5. Context-aware assistance

By analysing the user’s previous commands, AI can provide context-aware assistance, anticipating needs and offering relevant options. This will contribute to an improved and more intuitive voice dictation experience.

6. Seamless integration with other apps and services

AI can enable Voice Control to work harmoniously with other apps and services, providing a cohesive experience across the Apple ecosystem.

7. Multilingual support

AI can offer robust multilingual support, allowing Voice Control to cater to a global user base without language barriers.

8. Personalised speech recognition

One of the key ways that AI can enhance Voice Control is through personalised speech recognition. This feature can help people who have non-standard speech, which means they may face challenges in making themselves clear. AI can learn to recognise and transcribe their speech more accurately and naturally, regardless of the factors that affect their speech, such as weak voices, speech impediments, breathing difficulties, or muscle disorders. Google estimates that 250 million people have non-standard speech.

An example of a system that uses personalised speech recognition for non-standard speech is Google’s Project Relate application on Android phones. It uses a two-step training approach that starts with a baseline “standard” corpus and then fine-tunes the training with a personalised speech dataset, demonstrating significant improvements for speakers with atypical speech over current state-of-the-art models.
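To make the two-step idea concrete, here is a minimal Python sketch. It is a toy illustration only and not how Project Relate is actually built: the "recogniser" is a simple nearest-centroid classifier, the two-number feature vectors stand in for real audio, and every name and value in it (`train_baseline`, `fine_tune`, the sample data) is made up for the example. Step one averages recordings from a "standard" corpus; step two starts from that baseline and nudges it toward a handful of recordings from one speaker.

```python
from math import dist

def train_baseline(corpus):
    """Step 1: average each word's feature vectors from the 'standard' corpus."""
    centroids = {}
    for word, samples in corpus.items():
        dims = len(samples[0])
        centroids[word] = [sum(s[i] for s in samples) / len(samples) for i in range(dims)]
    return centroids

def fine_tune(centroids, personal_samples, rate=0.5):
    """Step 2: start from the baseline and move each centroid toward the user's recordings."""
    tuned = {word: list(c) for word, c in centroids.items()}
    for word, sample in personal_samples:
        tuned[word] = [c + rate * (s - c) for c, s in zip(tuned[word], sample)]
    return tuned

def recognise(centroids, sample):
    """Pick the word whose centroid is closest to the spoken sample."""
    return min(centroids, key=lambda word: dist(centroids[word], sample))

def accuracy(centroids, labelled):
    """Fraction of labelled samples that are recognised correctly."""
    hits = sum(recognise(centroids, sample) == word for word, sample in labelled)
    return hits / len(labelled)

# Hypothetical data: "standard" speakers, plus one user whose speech sits elsewhere.
STANDARD = {
    "yes": [(1.0, 1.0), (1.2, 0.8), (0.8, 1.2)],
    "no": [(3.0, 3.0), (3.2, 2.8), (2.8, 3.2)],
}
USER_TRAIN = [("yes", (2.4, 2.4)), ("yes", (2.6, 2.2)), ("no", (4.5, 4.5)), ("no", (4.7, 4.3))]
USER_TEST = [("yes", (2.5, 2.3)), ("no", (4.6, 4.4))]

baseline = train_baseline(STANDARD)
personal = fine_tune(baseline, USER_TRAIN)
print(accuracy(baseline, USER_TEST), accuracy(personal, USER_TEST))
```

With this made-up data the baseline hears the user's "yes" as "no" and gets one of the two test samples right, while the personalised version gets both. A real system works on far richer audio features and far larger models, but the shape is the same: a general model first, then a small amount of personal data to adapt it.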

Conclusion

Shortcomings with Voice Control affect not only the user experience but also the user’s independence and dignity. As someone who relies on the application to dictate, navigate, and interact with my iPhone and Mac via my voice, I can’t help but feel both grateful for its existence and frustrated by its shortcomings.

That’s why I’m excited by the potential of artificial intelligence to enhance Apple’s Voice Control app for accessibility. AI could help improve the app’s accuracy, functionality, and flexibility by:

1. Using natural language processing (NLP) to understand and generate speech more accurately and naturally.

2. Using machine learning (ML) to learn from user feedback and preferences and adapt to different scenarios and contexts.

The integration of AI into Apple’s Voice Control app would revolutionise the way disabled people interact with their devices and give dictation the jump in accuracy it desperately needs. By harnessing the power of AI, Voice Control can evolve into a more accurate, flexible, and personalised tool, empowering users to navigate their digital world with ease.

Tim Cook’s comment that Apple will “break new ground” in generative AI this year was intended to excite investors, but it may be disabled users who have the most to gain from the company’s promised AI revolution.

Watch this space!

Share your experiences or thoughts about Voice Control and AI in the comments section.